LAMA - Linked Annotations for Media Analysis

LAMA is the analysis tool for audiovisual media developed by the research project "Telling Sounds". It was designed to facilitate the analysis of audiovisual resources ("clips"), to help contextualize them, and to make it easier to identify interconnections between clips. In the first stage of development, the system was fed with metadata (URL, length, title, etc.). After that, the clips were annotated. Annotations can include the various topics, pieces of music, sounds and individuals ("entities") that are seen or heard in a clip. One feature of the tool is that, when describing what one notices in a clip, it is possible to distinguish between description and interpretation: one can specify whether something is unambiguously noticeable in the clip or whether the topic, place, etc. is merely implied, so that the viewer has to interpret it.

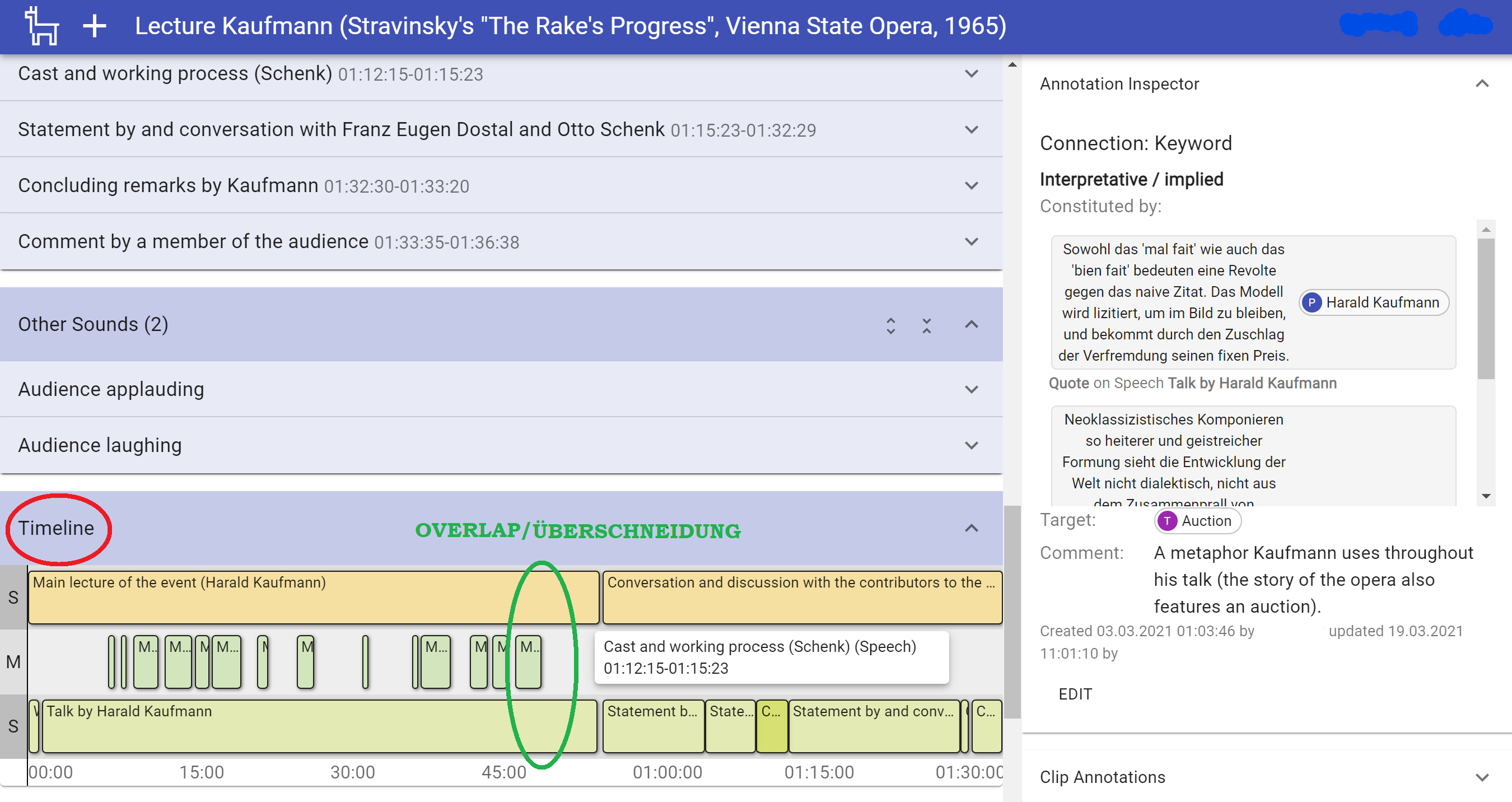

LAMA offers enough space to characterize every entry as extensively as needed and to record all necessary references, notes and specifications. Furthermore, the tool makes it possible to add a timecode to each clip, so that the required sequence can be found quickly. Timecodes can also be assigned to smaller subsections, which can then be described in detail.

These features make LAMA a valuable analysis tool that enables researchers to quickly identify which entities appear in which subsection and which entities appear together.
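The annotation model described above can be pictured as a small record type. The following Python sketch is only an illustration under stated assumptions: the class `Annotation`, its field names, and the helper `split_by_evidence` are hypothetical and are not taken from LAMA's actual implementation.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Annotation:
    """Hypothetical record for one annotated entity in a clip."""
    clip_id: str
    entity: str                    # a topic, piece of music, sound, or individual
    interpretive: bool             # False: plainly perceptible; True: merely implied
    start: Optional[float] = None  # timecode in seconds, if one was assigned
    end: Optional[float] = None
    note: str = ""                 # free-text references, notes, specifications


def split_by_evidence(
    annotations: List[Annotation],
) -> Tuple[List[Annotation], List[Annotation]]:
    """Separate descriptive annotations from interpretive ones."""
    described = [a for a in annotations if not a.interpretive]
    interpreted = [a for a in annotations if a.interpretive]
    return described, interpreted
```

Keeping the description/interpretation distinction as an explicit field, rather than burying it in a note, is what would let later queries filter on it.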

Another distinctive feature of LAMA is the ability to pose complex queries, for instance: Which entities occur together? To which topics was music added? This makes it easier to pursue very specific research questions and allows the researcher to work through much more material in less time.
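To make the first of these questions concrete, a co-occurrence query can be sketched in a few lines of Python. This is not LAMA's query interface; the function name `cooccurrence_counts` and the input shape (a mapping from clip identifier to the entities annotated in that clip) are assumptions chosen for illustration.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, Iterable, Tuple


def cooccurrence_counts(
    clip_entities: Dict[str, Iterable[str]],
) -> Counter:
    """Count in how many clips each pair of entities appears together.

    Keys of the result are alphabetically ordered (entity_a, entity_b) tuples.
    """
    counts: Counter = Counter()
    for entities in clip_entities.values():
        # Deduplicate within a clip so each pair counts once per clip.
        for pair in combinations(sorted(set(entities)), 2):
            counts[pair] += 1
    return counts


# Made-up sample data, for illustration only.
sample = {
    "clip-1": ["march", "radio", "speech"],
    "clip-2": ["radio", "march"],
    "clip-3": ["speech"],
}
pairs = cooccurrence_counts(sample)
most_frequent = pairs.most_common(1)
```

Sorting each clip's entities before pairing ensures that the same two entities always map to the same key regardless of annotation order.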

To provide a better overview of the clips and findings, the developers integrated a visual aid: every section that has been assigned a timecode can be depicted on a timeline. This enables the researcher to see at a glance which annotations (for instance specific sounds, a certain piece of music, or language) overlap in a clip. Simultaneity is thus easy to discern, and intersections are easy to spot (see figure 1).
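The overlaps that the timeline makes visible can also be computed. The sketch below assumes each timecoded section is given as a `(label, start, end)` tuple in seconds; this format and the function name `overlapping_pairs` are hypothetical and only illustrate the idea. Two sections overlap when each one starts before the other ends.

```python
from typing import List, Tuple

Segment = Tuple[str, float, float]  # (label, start, end), times in seconds


def overlapping_pairs(segments: List[Segment]) -> List[Tuple[str, str, float, float]]:
    """Return (label_a, label_b, overlap_start, overlap_end) for overlapping sections."""
    ordered = sorted(segments, key=lambda s: s[1])  # sort by start time
    result = []
    for i, (label_a, start_a, end_a) in enumerate(ordered):
        for label_b, start_b, end_b in ordered[i + 1:]:
            if start_b >= end_a:
                # Sorted by start: no later section can overlap section a.
                break
            # start_b >= start_a here, so the overlap begins at start_b.
            result.append((label_a, label_b, start_b, min(end_a, end_b)))
    return result


# Made-up sections of a single clip, for illustration only.
sections = [("music", 0, 30), ("speech", 10, 20), ("applause", 30, 40)]
overlaps = overlapping_pairs(sections)
```

Sections that merely touch at a boundary (one ends exactly where the next begins) are treated as not overlapping in this sketch.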

Another feature is currently in development. To make it possible to identify interrelations between entities, the team is working on a way to display them as "networks" within the tool. In the future, topics, places, individuals, etc. can then be linked so that larger contexts can be uncovered and displayed.

Figure 1